Regardless of who you are, either an algo trader hidden in the wilderness of Alaska or an active portfolio manager in a large financial institution in São Paulo, your investments are subject to sudden and undesired movements in the markets. There is one fear. We know it very well. It’s a low-probability, high-impact event. An extreme loss. An avalanche hitting your account balance hard. Really hard.

Financial risk management has developed a set of quantitative tools that help raise awareness of the worst-case scenarios. The two most popular are Value-at-Risk (VaR) and Expected Shortfall (ES). Both numbers tell you how much stress you put on your shoulders when the worst does take place.

Quoting a $VaR_{0.05}^{\rm 1-day}$ value, we assume that in 95% of cases we should feel confident that the maximal loss for our portfolio, $C\times VaR_{0.05}^{\rm 1-day}$, will not be exceeded in trading on the next day (1-day VaR), where $C$ is the current portfolio value (e.g. $\$$35,292.13). However, if the unforeseen happens, our best guess is that we will lose $C\times ES_{0.05}^{\rm 1-day}$ dollars, where $ES_{0.05}^{\rm 1-day}$ stands for the 1-day expected shortfall estimate. Usually $ES_{0.05}^{\rm 1-day} > VaR_{0.05}^{\rm 1-day}$. We illustrated this case previously in the VaR and Expected Shortfall vs. Black Swan article using IBM stock trading data. We also discussed a superbly rare case of extreme loss in the markets on Oct 19, 1987 (-22%), far beyond any measure.

In this post we will highlight an additional quantitative risk measure, quite often overlooked by portfolio managers: the extreme value-at-risk (EVaR). Using Matlab and a sample portfolio of stocks, we are going to compare VaR, ES, and EVaR for a better understanding of the amount of risk involved in the game. Getting hit hard is inevitable under drastic market swings, so you need to know the highest pain threshold: EVaR.

Generalised Extreme-Value Theory and Extreme VaR

The extreme-value theory (EVT) plays an important role in today’s financial world. It provides a tailor-made solution for those who wish to deal with highly unexpected events. In a classical VaR approach, the risk manager is mostly concerned with central-tendency statistics. We are surrounded by them every day. The mean values, standard deviations, $\chi^2$ distributions, etc. They are blood under the skin. The point is they are governed by central limit theorems, which cannot be applied to extremes. That is where the EVT enters the game.

From the statistical point of view, the problem is simple. We assume that $X$ is a random loss variable, independent and identically distributed (iid), drawn from an unknown distribution $F(x)=Pr(X\le x)$. EVT makes use of the fundamental Fisher-Tippett theorem and delivers a practical result: for a large sample of $n$ random losses, the extremes follow a limiting distribution known as the generalised extreme-value (GEV) distribution, defined as:

$$
H(z;a,b) = \left\{
\begin{array}{lr}
\exp\left[-\left( 1+ z\frac{x-b}{a} \right)^{-1/z} \right] & : z \ne 0\\
\exp\left[-\exp\left(-\frac{x-b}{a} \right) \right] & : z = 0 \\
\end{array}
\right.
$$

Under the condition of $x$ meeting $1+z(x-b)/a > 0$, the parameters $a$ and $b$ of the GEV distribution ought to be understood as the scale and location parameters of the limiting distribution $H$. Their meaning is close to, but distinct from, the standard deviation and the mean. The last parameter, $z$, corresponds to a tail index. The tail is important in $H$. Why? Simply because it points our attention to the heaviness of extreme losses in the data sample.

In our previous article on Black Swan and Extreme Loss Modeling we applied $H$ with $z=0$ to fit the data. This case of GEV is known as the Gumbel distribution, which corresponds to $F(x)$ having exponential (light) tails. If the underlying data fitted with the $H$ model reveal $z>0$, we talk about the Fréchet distribution. From a practical point of view, the Fréchet case is more useful as it takes heavy tails into account more carefully. By plotting the standardised Gumbel and Fréchet distributions ($a=1$, $b=0$), you can notice that the bulk of the mass of $H$ is contained between $x$ values of -2 and 6, which translates into the interval from $b-2a$ to $b+6a$. With $z>0$, the tail contributes more significantly to the production of very large $X$-values. The remaining case of $z<0$, the Weibull distribution, we will leave out of our interest here (the case of tails lighter than normal).

If we set the left-hand side of the GEV distribution to $p$, take logs of both sides of the above equations and rearrange them accordingly, we end up with:

$$
\ln(p) = \left\{
\begin{array}{lr}
-\left(1+zk \right)^{-1/z} & : z \ne 0\\
-\exp(-k) & : z = 0 \\
\end{array}
\right.
$$

where $k=(x-b)/a$ and $p$ denotes any chosen cumulative probability. From this point we can derive $x$ values in order to obtain quantiles associated with $p$, namely:

$$
x = \left\{
\begin{array}{ll}
b - \frac{a}{z} \left[1-(-\ln(p))^{-z}\right] & : ({\rm Fréchet}; z>0) \\
b - a\ln\left[-\ln(p)\right] & : ({\rm Gumbel}; z=0) \\
\end{array}
\right.
$$

Note, when $z\rightarrow 0$ then Fréchet quantiles tend to their Gumbel equivalents. Also, please notice that for instance for the standardised Gumbel distribution the 95% quantile is $-\ln[-\ln(0.95)] = 2.9702$ and points at its location in the right-tail, and not in the left-tail as usually associated with heaviest losses. Don’t worry. Gandhi says relax. In practice we fit GEV distribution with reversed sign or we reverse sign for 0.95th quantile for random loss variables of $x$. That point will become more clear with some examples that follow.
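The quantile formulas above are easy to check numerically. Below is a minimal Matlab sketch that uses base functions only; the helper names `qGumbel` and `qFrechet` are made up for this illustration (they are not Toolbox functions), and the standardised case $a=1$, $b=0$ is assumed.

```matlab
p = 0.95;        % chosen cumulative probability
a = 1; b = 0;    % standardised scale and location (assumption)

% Quantile formulas as given above (illustrative helper names)
qGumbel  = @(p,a,b)   b - a*log(-log(p));
qFrechet = @(p,z,a,b) b - (a/z)*(1 - (-log(p))^(-z));

xG = qGumbel(p,a,b);          % 95% quantile of the Gumbel case
xF = qFrechet(p,1e-6,a,b);    % Frechet case with a tail index close to zero
xH = qFrechet(p,0.5,a,b);     % Frechet case with a heavier tail, z = 0.5

% Round trip: the Gumbel CDF evaluated at its own quantile should return p
pBack = exp(-exp(-(xG-b)/a));

fprintf('Gumbel: %.4f  Frechet(z->0): %.4f  Frechet(z=0.5): %.4f\n', xG, xF, xH);
fprintf('H(xG) = %.4f\n', pBack);
```

The Gumbel value should reproduce the 2.9702 quoted above, the near-zero tail index should land practically on top of it, and a larger $z$ pushes the same quantile further out into the tail.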

We allow ourselves to define the Extreme Value-at-Risk (EVaR) associated directly with the GEV distribution, $H_n$, fitted to the data ($n$ data points), satisfying

$$
Pr[H_n < H'] = p = \{Pr[X < H']\}^n = [\alpha]^n
$$

where $\alpha$ is the VaR confidence level associated with the threshold $H'$, in the following way:

$$
\mbox{EVaR} = \left\{
\begin{array}{ll}
b_n - \frac{a_n}{z_n} \left[1-(-n\ln(\alpha))^{-nz_n}\right] & : ({\rm Fréchet}; z>0) \\
b_n - a_n\ln\left[-n\ln(\alpha)\right] & : ({\rm Gumbel}; z=0) \\
\end{array}
\right.
$$

These formulas provide a practical way of estimating VaR based on the analysis of the distribution and frequency of extreme losses in the data samples, and are meant to work best for very high confidence levels. Additionally, as pointed out by Dowd and Hauksson, the EVaR does not obey the square-root-of-holding-period rule, which applies to a standard VaR derived for (log-)normal distributions of return series.

1-day EVaR for Assets in your Portfolio

All right. Time to connect the dots. Imagine you hold a portfolio of 30 bluechips that make up the Dow Jones Industrial index. Before the market opens, regardless of the quantity and direction of the positions you hold in each of those 30 stocks, you, as a cautious portfolio manager, want to know the estimate of the extreme loss that can take place on the next trading day.

You look at the last 252 trading days of every stock, so you are able to extract a sample of $N=30$ largest losses (one per stock over the last 365 calendar days). Given this sample, we can fit it with the GEV distribution and find the best estimates of the $z$, $a$ and $b$ parameters. In practice, however, $N=30$ is a small sample. There is nothing wrong with extracting, for each stock, the 5 worst losses that took place within the last 252 trading days. That allows us to consider a sample of $n=150$ points (see the code below).

Let’s use Matlab and the approach described in the Create a Portfolio of Stocks based on Google Finance Data fed by Quandl post, here re-quoted in a shorter form:

    % Extreme Value-at-Risk
    %
    % (c) 2014 QuantAtRisk.com, by Pawel Lachowicz

    close all; clear all; clc;

    % Read the list of Dow Jones components
    fileID = fopen('dowjones.lst');
    tmp = textscan(fileID, '%s');
    fclose(fileID);
    dowjc=tmp{1}; % a list as a cell array

    % Read in the list of tickers and internal code from Quandl.com
    [ndata, text, alldata] = xlsread('QuandlStockCodeListUS.xlsx');
    quandlc=text(:,1);    % again, as a list in a cell array
    quandlcode=text(:,3); % corresponding Quandl's Price Code

    % fetch stock data for last 365 days
    date2=datestr(today,'yyyy-mm-dd')     % to
    date1=datestr(today-365,'yyyy-mm-dd') % from

    N=length(dowjc);
    R=cell(N,1); % one return-series per stock

    % scan the Dow Jones tickers and fetch the data from Quandl.com
    for i=1:N
        for j=1:length(quandlc)
            if(strcmp(dowjc{i},quandlc{j}))
                fprintf('%4.0f %s\n',i,quandlc{j});
                [fts,headers]=Quandl.get(quandlcode{j},'type','fints', ...
                              'authcode','YourQUANDLtocken',...
                              'start_date',date1,'end_date',date2);
                cp=fts2mat(fts.Close,0);        % close price
                R{i}=cp(2:end)./cp(1:end-1)-1;  % return-series
            end
        end
    end

where we make use of external files: dowjones.lst (tickers of stocks in our portfolio) and QuandlStockCodeListUS.xlsx (see here for more details on utilising Quandl.com‘s API for fetching Google Finance stock data).

Matlab’s cell array $R$ holds 30 return-series (each 251 points long). Having that, we find the top 5 maximal daily losses for each stock, ending up with a data sample of $n=150$ points as mentioned earlier.

    Rmin=[];
    for i=1:N
        ret=sort(R{i});   % ascending order: the worst losses come first
        Mn=ret(1:5);      % extract 5 worst losses per stock
        Rmin=[Rmin; Mn];
    end

Now, we fit the GEV distribution,

$$
H(z;a,b) = \exp\left[-\left( 1+ z\frac{x-b}{a} \right)^{-1/z} \right] \ \ ,
$$

employing a ready-to-use function, gevfit, from Matlab’s Statistics Toolbox:

    % fit GEV distribution
    [parmhat,parmci] = gevfit(Rmin)
    z=parmhat(1); % tail index
    a=parmhat(2); % scale parameter
    b=parmhat(3); % location parameter

which returns for date2 = 2014-03-01,

    parmhat =
       -0.8378    0.0130   -0.0326
    parmci =
       -0.9357    0.0112   -0.0348
       -0.7398    0.0151   -0.0304

id est, the best estimates of the model’s parameters:

$$
z_{150} = -0.8378^{+0.0980}_{-0.0979}, \ \ a_{150} = 0.0130^{+0.0021}_{-0.0018}, \ \ b_{150} = -0.0326^{+0.0022}_{-0.0022} \ \ .
$$

At this point, it is essential to note that the negative $z$ indicates the Fréchet distribution since we fitted the data with negative signs.

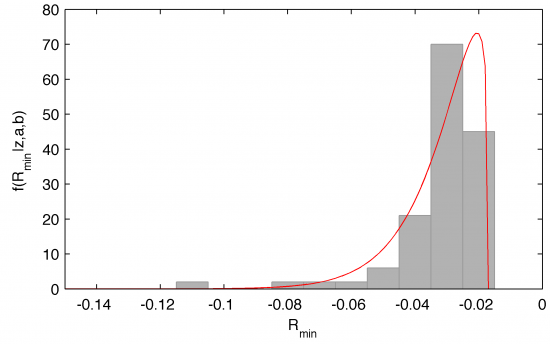

Plotting data with model,

    % plot data with the GEV distribution for found z,a,b
    x=-1:0.001:1;
    hist(Rmin,-1:0.01:0);
    h=findobj(gca,'Type','patch');
    set(h,'FaceColor',[0.7 0.7 0.7],'EdgeColor',[0.6 0.6 0.6]);
    h=findobj(gca,'Type','box');
    set(h,'Color','k');

    % GEV pdf, rescaled by bin width (0.01) and sample size to match the histogram
    pdf1=gevpdf(x,z,a,b);
    y=0.01*length(Rmin)*pdf1;
    line(x,y,'color','r'); box on;
    xlabel('R_{min}');
    ylabel(['f(R_{min}|z,a,b)']);
    xlim([-0.15 0]);

illustrates our efforts in a clear manner:

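Finally, evaluating the formula,

    n=length(Rmin);  % n=150 extreme losses in the sample
    z=-z;            % flip the sign of the tail index (data fitted with negative signs)
    EVaR=b-(a/z)*(1-(-n*log(0.95))^(-n*z))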

we calculate the best estimate of 1-day EVaR as discussed:

$$
\mbox{EVaR} = b_{150} - \frac{a_{150}}{-z_{150}} \left[1-(-n\ln(0.95))^{nz_{150}}\right] = -0.0481 \ \ .
$$

This number tells us that among the 30 bluechips in our portfolio we should expect an extreme loss of 4.81% on the following day (estimated using the last 252 days of trading history).
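To see where -0.0481 comes from, the arithmetic can be retraced step by step. The sketch below is only a cross-check, assuming the rounded parameter values reported above for date2 = 2014-03-01 and $n=150$; it needs no toolbox.

```matlab
% Rounded estimates reported above; the sign of z is already flipped
z = 0.8378;  a = 0.0130;  b = -0.0326;
n = 150;     alpha = 0.95;

base = -n*log(alpha);         % the quantity raised to a power in the formula
term = base^(-n*z);           % power term inside the square bracket
EVaR = b - (a/z)*(1 - term);  % same expression as in the post

fprintf('base = %.4f, term = %.3g, EVaR = %.4f\n', base, term, EVaR);
```

With these inputs the power term is vanishingly small, so the estimate is essentially $b - a/z$ with the flipped $z$: the location shifted down by the scale divided by the tail index.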

Now, is 4.81% a lot or not? That reminds me of an immortal question asked by one of my colleagues many years ago, a guy who loved studying philosophy: Is 4 kilograms of potatoes a lot or not? The best way to address it is by comparison. Let us again quickly look at the return series of the 30 stocks in our portfolio (R cell array in the code). For each individual series we derive its normalised distribution (histogram), fit it with a normal probability density function, and calculate the corresponding 95% VaR (var local variable) in the following way:

    %--figure;
    VaR=[];
    for i=1:N
        rs=R{i};
        % create the normalised histogram for the return series
        [H,h]=hist(rs,100);
        binw=h(2)-h(1);      % bin width (bins are equally spaced)
        H=H/(sum(H)*binw);   % unit area under the histogram
        %--bar(h,H,'FaceColor',[0.7 0.7 0.7],'EdgeColor',[0.7 0.7 0.7])
        % fit the return series with a normal distribution
        [muhat,sigmahat] = normfit(rs');
        Y=normpdf(h,muhat(1),sigmahat(1));
        %--hold on; plot(h,Y,'b-'); % and plot the fit using a blue line
        % find VaR for stock 'i': integrate the fitted pdf from the left
        % until the cumulative probability reaches 5%
        cum=0;
        k=1;   % separate counter, so the stock index 'i' is left alone
        while (k<length(h))
            cum=cum+(Y(k)*(h(k+1)-h(k)));
            if(cum>=0.05)
                break;
            end
            k=k+1;
        end
        var=h(k)
        %--hold on; plot(h(k),0,'ro','markerfacecolor','r'); % mark VaR_fit
        VaR=[VaR; var];
    end
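The step-wise integration in the loop above can be cross-checked against the closed form for a normal distribution, where the 5% quantile is $\mu + \sigma\,\Phi^{-1}(0.05)$. The sketch below is an illustration only: the return values are made up, the mean and standard deviation are taken with mean and std (which is what I would expect normfit to report), and the normal quantile is built from the base function erfcinv so that no toolbox is needed.

```matlab
% Made-up daily returns standing in for one return-series R{i}
rs = [0.012; -0.008; 0.003; -0.021; 0.007; -0.002; 0.015; -0.011];

mu    = mean(rs);   % sample mean
sigma = std(rs);    % sample standard deviation

% Standard normal 5% quantile via the inverse complementary error function
q05 = -sqrt(2)*erfcinv(2*0.05);

VaR95 = mu + sigma*q05;   % closed-form 95% VaR of the normal fit

fprintf('z_0.05 = %.4f, VaR = %.4f\n', q05, VaR95);
```

The histogram-based value from the loop is limited by the bin width, so it should agree with this closed form only to within roughly one bin.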

The vector of VaR then holds all thirty 95% VaR. By running the last piece of code,

    figure;
    [H,h]=hist(VaR*100,20);
    evarLine=[EVaR*100 0; EVaR*100 6];  % avoid shadowing the built-in line()
    bar(h,H,'FaceColor',[0.7 0.7 0.7],'EdgeColor',[0.7 0.7 0.7])
    hold on; plot(evarLine(:,1),evarLine(:,2),'r:');
    xlim([-6 0]); ylim([0 6]);
    xlabel('95% VaR [percent]'); ylabel('Histogram');

we plot their distribution and compare them to EVaR of -4.81%, here marked by red dotted line in the chart:

The calculated 1-day 95% EVaR of -4.81% sits much further out than any of the classical 95% VaR values derived for the 30 stocks. It’s an extreme case indeed.

You can use the 1-day EVaR to calculate the expected loss in your portfolio just by assuming that every single stock will drop by 4.81% on the next day. This number (in terms of the currency of your portfolio) will surely make you more alert.
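As a quick illustration of that last step, here is a minimal sketch that converts the EVaR into currency. It reuses the example portfolio value of $\$$35,292.13 quoted at the beginning of the post and assumes a long-only portfolio, so that every position loses when prices fall.

```matlab
C    = 35292.13;   % current portfolio value in dollars (example figure from the intro)
EVaR = -0.0481;    % 1-day EVaR estimated above

lossEVaR = C*abs(EVaR);   % dollar loss if every stock drops by |EVaR|

fprintf('Loss if every stock drops by %.2f%%: $%.2f\n', 100*abs(EVaR), lossEVaR);
```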

Richard Branson once said: If you gonna fail, fail well. Don’t listen to him.

4 comments

I am just exploring Quant-at-Risk and think you are doing excellent work. Your use of MatLab code to illustrate computations is awesome.

I have long been a critic of extreme risk metrics, such as expected shortfall, extreme value-at-risk and even high-quantile, long-horizon value-at-risk. I hope you won’t mind me inserting a caution that backtesting such risk measures is problematic. Before you use a particular extreme risk measure, ask yourself how you are going to backtest it. If you are uncomfortable with your answer to that question, don’t use that risk measure.

Glyn, thanks for such warm words of appreciation! Your question is justified. No doubt. I address this topic, firstly, to raise general awareness of those risk measures, and secondly, to make every possible effort to seize the “impossible to seize”. For years I have read hundreds of comments from those who only talk but don’t dare to take an active approach to understanding the highest risks. I study the data. They can tell a lot. It’s all about how persistent you are.

A masterpiece! Great job Pawel. Love to read your posts.

Excellent post Pawel! Very practical with understandable example and code. Thank you for sharing your knowledge.